We started with the sklearn implementation with preprocessing happening for each class, as this is how the model was initially developed.Here’s a detailed description per development iteration: It turns out that searching for the optimal configuration is not a linear process. This figure outlines the difference among the three configurations: Compare each input vector to a global matrix comprised of all papers in all reviews.

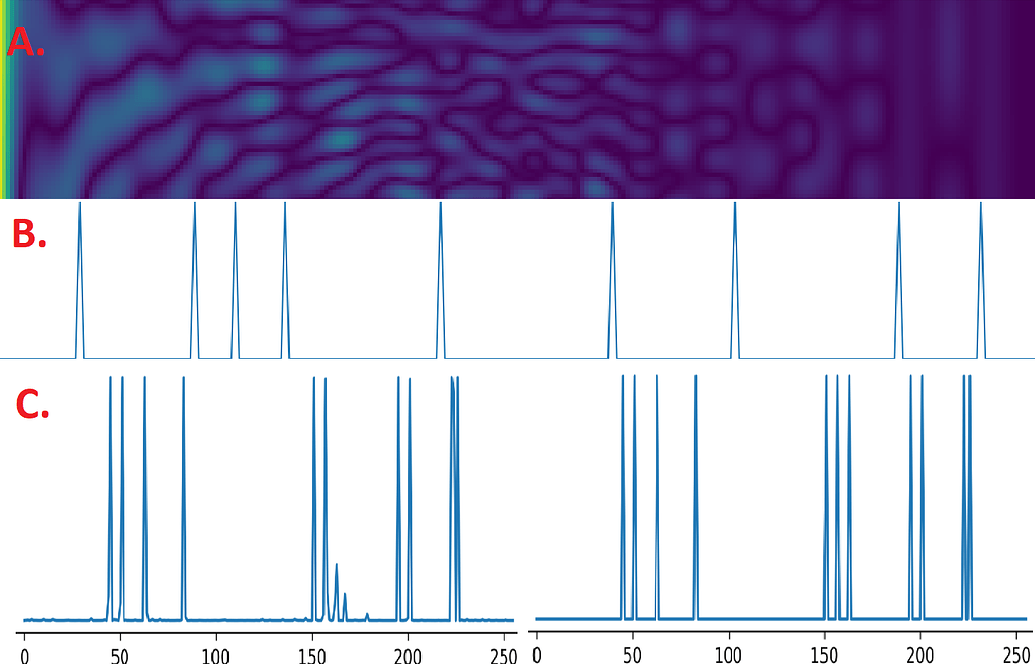

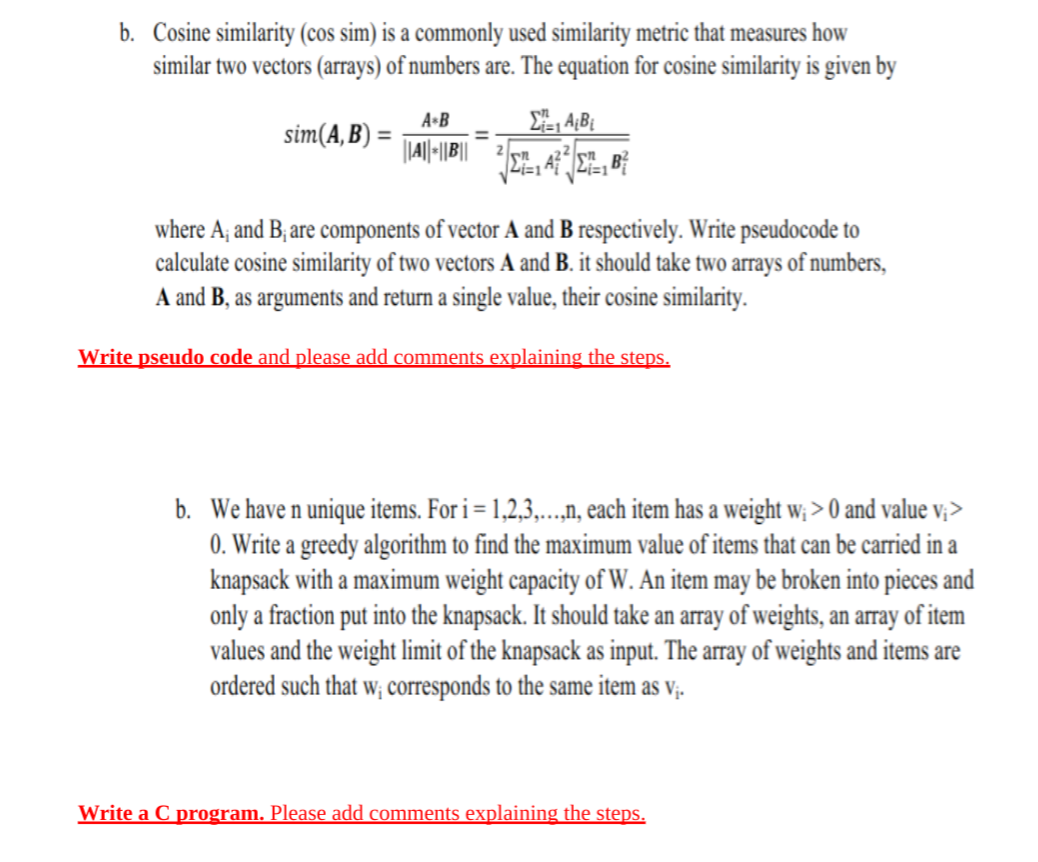

Compare each input vector to a matrix comprised of one review’s papers vectors.Compare each input vector (test paper TF/IDF) to each vector of each paper in each review.In our setting, there are three main options: So one question is how each input matrix is represented. Input representationĬosine similarity is essentially a normalized dot product. Which one is better? The answer isn’t that obvious and relies on input size and representation. The cdist method is implemented entirely in C and cosine-similarity uses numpy operators. Implementation: The implementation in both packages is different.cdist, on the other hand, accepts ndarrays only. Sparsity should be an advantage if the dataset is large. Data representation: In sklearn, the cosine-similarity method can accept a sparse matrix ( csr matrix) that is comprised of a set of input vectors.There are more, but these two are interesting from two main perspectives: We looked at two main implementations: The scikit-learn cosine-similarity and the scipy cdist. Here’s how! Cosine distance implementation After evaluating and benchmarking our system, we concluded that improvements with the most impact would come from representing the input matrices differently and selecting the right cosine distance implementation (point 5). Cosine distance calculation improvements (implementation and input representation)Īs it turns out, significant improvement in the runtime process could be realized.Reducing the number of candidate test papers by using a rule-based logic that detects irrelevant papers (for instance, using other paper attributes like field of study).We had to come up with a way to improve it. In fact, the first time we ran the model as it was developed during research, it took 16 seconds on average to compare one test sample to all reviews, which translates to two weeks of processing for the number of test papers we have. 
When the number of reviews is large, and the number of papers in each review is also large, this process can take a lot of time. The pseudo-code for inference is the following:

This approach turned out to be more accurate than methods like Universal Sentence Encoder, sciBERT, SIF, FastText and others.įor pre-processing, we used spaCy to perform actions like tokenization, removal of stop words and special characters, and lemmatization. For example, we could look at mean similarity to the 10 closest neighbors. We then aggregate the paper-to-paper similarities to get a similarity metric between the new paper and an entire review. In this setting, we compare the new paper’s TF/IDF vector to TF/IDF vectors of all or some of the papers in each review. One of the most accurate approaches we looked into was TF/IDF on the paper level in each review. Our dataset was comprised of title and abstract per paper. The requirement is to make this process run for no more than a couple hours. In our production setting, we are likely to get between 100,000 to 1.5 million new papers to score. More formally, the problem is the following: In our setting, each review holds a set of papers already collected, and we wish to propose relevant new papers that the researchers might be interested in reviewing and including in their reviews. For more information on the project, see this blog post. Our main objective in this project was to keep reviews up to date by proposing new scientific papers to review owners.

UCL EPPI Centre develops intelligent tools for Systematic Literature Reviews, used by researchers who conduct analyses in many public-health related domains, to come up with evidence-based suggestions for policymakers.

NUMPY COSINE SIMILARITY FULL

This article describes one aspect of a full system developed by the Commercial Software Engineering (CSE) team at Microsoft, together with developers and researchers from University College London’s EPPI Centre. The process usually involves some encoding or embedding of the query, and a similarity measurement (maybe using cosine similarity) between the query and each document. In many NLP pipelines, we wish to compare a query to a set of text documents.
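
A quick back-of-envelope check makes the production requirement concrete. This is a sketch that assumes "a couple of hours" means exactly 2 hours; the 16-second baseline and the range of 100,000 to 1.5 million papers come from the text, and the function name required_speedup is hypothetical.

```python
# Back-of-envelope latency budget for the production requirement.
# Assumption: "a couple of hours" is taken to mean exactly 2 hours.
BUDGET_SECONDS = 2 * 60 * 60
# From the text: the research prototype averaged 16 s per new paper.
BASELINE_SECONDS_PER_PAPER = 16.0


def required_speedup(num_papers: int) -> tuple[float, float]:
    """Return (per-paper time budget in seconds, speedup needed over the baseline)."""
    per_paper = BUDGET_SECONDS / num_papers
    return per_paper, BASELINE_SECONDS_PER_PAPER / per_paper


# The text gives a range of 100,000 to 1.5 million new papers per run.
for n in (100_000, 1_500_000):
    per_paper, speedup = required_speedup(n)
    print(f"{n:>9,} papers: {per_paper * 1000:.1f} ms/paper, ~{speedup:,.0f}x faster needed")
```

Under that 2-hour assumption, the per-paper budget is 72 ms at the low end and 4.8 ms at the high end, roughly 222x to 3,333x faster than the 16-second baseline.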
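
The scoring approach described in the text (paper-level TF/IDF, then aggregating paper-to-paper similarities into one score per review) can be sketched with scikit-learn. This is a minimal illustration under stated assumptions, not the project's code: the toy reviews, the helper names top_k_mean and score_new_paper, and TfidfVectorizer with default settings are all assumptions, and the spaCy preprocessing step is omitted. The default k=10 mirrors the "10 closest neighbors" example.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

# Hypothetical toy data: each review maps to its papers' "title + abstract" text.
reviews = {
    "smoking": ["tobacco smoking cessation trial", "nicotine replacement therapy for smokers"],
    "diet": ["sugar intake and childhood obesity", "school meals and child nutrition"],
}


def top_k_mean(sims: np.ndarray, k: int = 10) -> float:
    """Mean similarity to the k closest papers (all papers if a review has fewer than k)."""
    k = min(k, sims.size)
    # np.partition moves the k largest values into the last k slots without a full sort.
    return float(np.partition(sims, -k)[-k:].mean())


# Fit one vocabulary over all known papers so every vector lives in the same space.
all_papers = [paper for papers in reviews.values() for paper in papers]
vectorizer = TfidfVectorizer().fit(all_papers)
# One sparse (CSR) matrix per review: one row per paper.
review_matrices = {name: vectorizer.transform(papers) for name, papers in reviews.items()}


def score_new_paper(text: str, k: int = 10) -> dict[str, float]:
    """Score one new paper against every review (hypothetical helper)."""
    query = vectorizer.transform([text])  # 1 x vocabulary sparse row
    return {
        name: top_k_mean(cosine_similarity(query, matrix).ravel(), k)
        for name, matrix in review_matrices.items()
    }


print(score_new_paper("randomised trial of nicotine patches to help smokers quit"))
```

In this toy run, the new paper shares terms only with the smoking review, so the diet review scores 0. Calling score_new_paper once per new paper and once per review inside it is exactly the per-paper, per-review loop whose runtime the text describes as the bottleneck.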
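
The two implementations compared in the text differ in what they accept and what they return. sklearn's cosine_similarity takes dense or sparse (CSR) input and returns similarities, while SciPy's cdist with metric="cosine" takes dense arrays and returns cosine distances, that is, 1 minus the similarity. The sketch below checks that both produce the same numbers. The fake_tfidf helper and the matrix sizes are hypothetical stand-ins for real TF/IDF vectors.

```python
import numpy as np
from scipy.sparse import csr_matrix
from scipy.spatial.distance import cdist
from sklearn.metrics.pairwise import cosine_similarity

rng = np.random.default_rng(0)


def fake_tfidf(n_rows: int, n_terms: int = 1000, density: float = 0.01) -> np.ndarray:
    """Hypothetical stand-in for TF/IDF rows: mostly zeros, non-negative weights."""
    x = rng.random((n_rows, n_terms)) * (rng.random((n_rows, n_terms)) < density)
    # Guarantee no all-zero rows: cdist would return NaN for a zero vector.
    x[np.arange(n_rows), rng.integers(0, n_terms, n_rows)] = 1.0
    return x


queries_dense = fake_tfidf(5)    # e.g. 5 new papers
papers_dense = fake_tfidf(200)   # e.g. 200 papers already in reviews

# sklearn accepts sparse CSR input directly and returns similarities.
sims_sklearn = cosine_similarity(csr_matrix(queries_dense), csr_matrix(papers_dense))

# cdist needs dense ndarrays and returns cosine distances, so convert back.
sims_scipy = 1.0 - cdist(queries_dense, papers_dense, metric="cosine")

print(sims_sklearn.shape, np.allclose(sims_sklearn, sims_scipy))
```

Because the results agree, the choice between them comes down to speed and memory. As the text notes, that depends on input size and representation, so it is worth timing both (for example with timeit) on representative data rather than drawing conclusions from this toy setup.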
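
The three input representations listed in the text can be compared directly by treating cosine similarity as a dot product of L2-normalized vectors. This NumPy-only sketch uses random vectors in place of TF/IDF rows (an assumption for brevity) and checks that all three options produce identical per-review scores. They differ only in how many matrix operations are run and how large each one is.

```python
import numpy as np

rng = np.random.default_rng(42)
# Hypothetical data: 3 reviews holding 4, 7 and 3 papers, as 50-dim vectors.
reviews = [rng.random((n, 50)) for n in (4, 7, 3)]
query = rng.random(50)  # one new paper


def normalize(m: np.ndarray) -> np.ndarray:
    """Scale each row (or a single vector) to unit L2 norm."""
    return m / np.linalg.norm(m, axis=-1, keepdims=True)


q = normalize(query)

# Option 1: compare the query to each paper vector, one at a time.
opt1 = [np.array([normalize(p) @ q for p in review]) for review in reviews]

# Option 2: one matrix-vector product per review.
opt2 = [normalize(review) @ q for review in reviews]

# Option 3: stack every paper of every review into one global matrix,
# score once, then split the result back into per-review slices.
global_matrix = normalize(np.vstack(reviews))
offsets = np.cumsum([len(r) for r in reviews])[:-1]  # row where each review starts
opt3 = np.split(global_matrix @ q, offsets)

for a, b, c in zip(opt1, opt2, opt3):
    assert np.allclose(a, b) and np.allclose(b, c)
print([len(scores) for scores in opt3])
```

Options 2 and 3 replace many small vector operations with fewer, larger matrix products, which typically reduces Python-level overhead. Option 3 also needs the offsets bookkeeping to map rows of the global matrix back to their reviews. Which option is fastest in practice still has to be measured, since, as the text says, the search for the optimal configuration is not a linear process.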
